
Running the spaCy Transformer Model in Lambda as a Container Image

What we are going to discuss

In this blog post, we will look at how container image support has made AWS Lambda an ideal choice for running machine learning models. We will also look at how we use a container image to run the spaCy Named Entity Recognition model here at VISO.

When to use Lambdas

Lambda functions run our code on a serverless compute service. They are triggered by events or invoked by other functions, and they work very well with other AWS services: a Lambda can be triggered by modifications to objects in S3, by a new message in SQS, or by another Lambda function.

Lambda runs your code on high availability compute infrastructure and performs all the administration of your compute resources. This includes server and operating system maintenance, capacity provisioning and automatic scaling, code and security patch deployment, and code monitoring and logging. All you need to do is supply the code. (Source: AWS Lambda documentation)
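Because S3 and SQS triggers deliver their data inside the `event` payload, here is a minimal sketch of how a Python handler might read it. The helper names are hypothetical; the record fields follow the standard S3 and SQS event formats.

```python
from urllib.parse import unquote_plus


def s3_objects_from_event(event):
    """Return (bucket, key) pairs from an S3 event notification.

    Object keys arrive URL-encoded (a space becomes '+'), so they are
    decoded before being used in S3 API calls.
    """
    pairs = []
    for record in event.get("Records", []):
        if record.get("eventSource") != "aws:s3":
            continue  # skip records from other sources
        bucket = record["s3"]["bucket"]["name"]
        key = unquote_plus(record["s3"]["object"]["key"])
        pairs.append((bucket, key))
    return pairs


def sqs_bodies_from_event(event):
    """Return the message bodies from an SQS event."""
    return [
        record["body"]
        for record in event.get("Records", [])
        if record.get("eventSource") == "aws:sqs"
    ]
```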

Why did AWS add support for container packaging?

Before this change, a Lambda deployment package had to be a .zip file containing the code and all of its libraries and dependencies. The zipped package was limited to 50 MB for direct upload (250 MB unzipped, including layers), which restricted what Lambda could be used for.

Previously, we implemented the spaCy model by storing it on Amazon Elastic File System (EFS), mounting the file system in our Lambda function and loading the model from there. This approach made it possible to load more data than fits in Lambda's /tmp directory (512 MB). We still had to install the spaCy package as a Lambda Layer or as part of the ZIP package. While this let us run the medium spaCy model (43 MB), we ran into issues with the spaCy Transformer model because of its large dependencies, such as PyTorch.

In December 2020, AWS announced support for packaging AWS Lambda functions as container images. With this support, we can package and deploy Lambda functions as container images of up to 10 GB. This allowed us to run the larger spaCy Transformer model (438 MB) by packaging it inside the Docker image, which gives more accurate entity extraction.
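To make the size constraint concrete, here is a rough sketch that measures a local bundle directory (for example, a folder created with `pip install --target`) and reports which packaging format could hold it. The limits are the documented 250 MB unzipped and 10 GB image sizes, treated here as MiB/GiB; the function names are hypothetical.

```python
import os

# Documented Lambda size limits, in bytes (approximate, treating MB/GB as MiB/GiB).
ZIP_UNZIPPED_LIMIT = 250 * 1024 * 1024   # .zip package plus layers, unzipped
IMAGE_LIMIT = 10 * 1024 ** 3             # container image


def directory_size(path):
    """Total size in bytes of all regular files under path (symlinks skipped)."""
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            full = os.path.join(root, name)
            if os.path.isfile(full) and not os.path.islink(full):
                total += os.path.getsize(full)
    return total


def packaging_options(size_bytes):
    """Return the packaging formats whose size limit a bundle of this size fits."""
    options = []
    if size_bytes <= ZIP_UNZIPPED_LIMIT:
        options.append("zip")
    if size_bytes <= IMAGE_LIMIT:
        options.append("container image")
    return options
```

A bundle holding only the medium model fits both formats, while a multi-gigabyte bundle with PyTorch and the Transformer model only fits in a container image.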

How to run the spaCy Transformer model in a container image

- On your local machine, create a project directory for your new Lambda function.

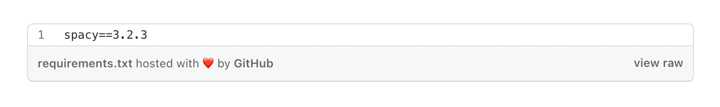

- Install dependencies

In your project directory, add a text file named requirements.txt and list each required library and its version on a separate line. In this case, specify the spaCy version you want to install. If the Lambda uses other libraries, add them to this file as well:

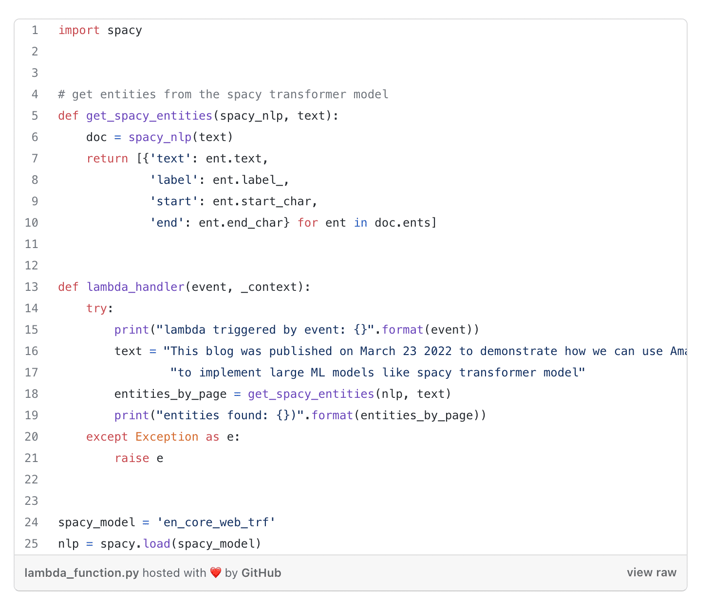

- Create a Lambda function

Now create a file named lambda_function.py containing your function code. The following example shows a simple Lambda function that loads the spaCy Transformer model and runs it on a text string. If the Lambda container image executes correctly, we will see the entities extracted from the text.

We can also run the model on documents by adding a step that extracts their text with a tool such as pdftotext or PyTesseract (instead of line 16) and then running the Transformer model on the extracted text.

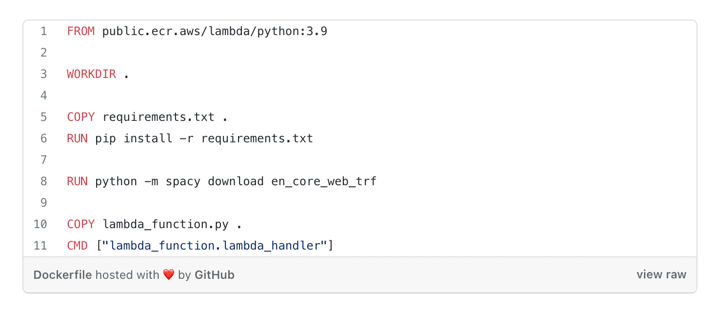
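For readers who cannot see the screenshot, here is a minimal sketch of such a handler, not the exact code above. It assumes the English Transformer pipeline `en_core_web_trf` is installed in the image; `MODEL_NAME`, `SAMPLE_TEXT`, `get_nlp` and `extract_entities` are hypothetical names. The model is cached in a module-level variable so that only the cold start pays the loading cost.

```python
import json

MODEL_NAME = "en_core_web_trf"  # assumed: spaCy's English Transformer pipeline
SAMPLE_TEXT = "Apple is looking at buying a U.K. startup for $1 billion."

_nlp = None  # cached pipeline, reused across warm invocations


def get_nlp():
    """Load the spaCy pipeline once per execution environment."""
    global _nlp
    if _nlp is None:
        import spacy  # heavy import, paid only on a cold start
        _nlp = spacy.load(MODEL_NAME)
    return _nlp


def extract_entities(nlp, text):
    """Run the pipeline on text and return its named entities."""
    doc = nlp(text)
    return [{"text": ent.text, "label": ent.label_} for ent in doc.ents]


def lambda_handler(event, context):
    # Fall back to a fixed sample, since a console test event has no "text" key.
    text = event.get("text", SAMPLE_TEXT) if isinstance(event, dict) else SAMPLE_TEXT
    entities = extract_entities(get_nlp(), text)
    print(entities)  # anything printed ends up in CloudWatch Logs
    return {"statusCode": 200, "body": json.dumps(entities)}
```

Loading the model at module level instead of lazily has the same effect; either way, the model stays in memory between invocations of a warm container.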
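The document variant mentioned above could use a small dispatcher like the hypothetical `extract_text` below. It is a sketch under assumptions: it uses the `pdftotext` package for PDFs and `pytesseract` with Pillow for scanned images, both of which would likely need native libraries (Poppler and Tesseract) installed in the Docker image. A plain .txt branch is included so the dispatch logic can be tried without either tool.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".tif", ".tiff"}


def extract_text(path):
    """Return plain text from a PDF, a scanned image or a .txt file."""
    suffix = Path(path).suffix.lower()
    if suffix == ".pdf":
        import pdftotext  # assumed installed; needs Poppler in the image
        with open(path, "rb") as f:
            pdf = pdftotext.PDF(f)
        return "\n\n".join(pdf)  # pdftotext yields one string per page
    if suffix in IMAGE_SUFFIXES:
        import pytesseract  # assumed installed; needs the Tesseract binary
        from PIL import Image
        with Image.open(path) as image:
            return pytesseract.image_to_string(image)
    if suffix == ".txt":
        return Path(path).read_text(encoding="utf-8")
    raise ValueError(f"Unsupported document type: {suffix or path}")
```

The returned string can then be passed to the spaCy pipeline in place of the hard-coded text.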

- Create a Dockerfile

Use a text editor to create a Dockerfile in your project directory. The following example shows the Dockerfile for the handler we created in the previous step. In this Dockerfile we use the AWS base image for Python 3.9. The benefit of doing this is that these base images are preloaded with a language runtime and other components that are required to run the image on Lambda.

If you want to use your own base image, you must add the Lambda Runtime Interface Client to it to make it compatible with Lambda. In the Dockerfile, we install our libraries from requirements.txt and then the spaCy Transformer model. The resulting Docker image should be around 3 GB in size.

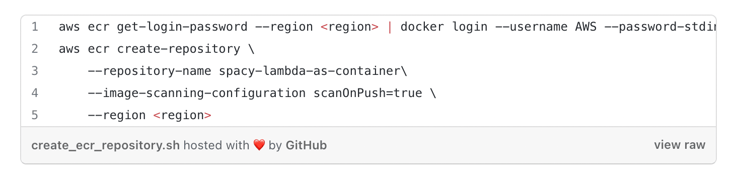
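Before pushing the image anywhere, it can be tested locally. The AWS base images include the Runtime Interface Emulator, so after starting the container with `docker run -p 9000:8080 <image>`, a test event can be POSTed to the emulator's invocation endpoint. A minimal sketch using only the standard library; port 9000 and the function names are assumptions.

```python
import json
import urllib.request

# Invocation path served by the Runtime Interface Emulator in the AWS base images.
RIE_PATH = "/2015-03-31/functions/function/invocations"


def build_local_invoke_request(event, port=9000):
    """Build a POST request that invokes the containerised handler locally."""
    return urllib.request.Request(
        f"http://localhost:{port}{RIE_PATH}",
        data=json.dumps(event).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def invoke_local(event, port=9000, timeout=300):
    """Send the event to the running container and decode the handler's response."""
    request = build_local_invoke_request(event, port)
    # Generous timeout: the first call loads the Transformer model.
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```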

- Create a new ECR repository

Now that all our files are ready, let's create an Amazon ECR repository in AWS to upload our Docker image to. We can do this with the following AWS CLI commands (add your region and account ID) or from the console:

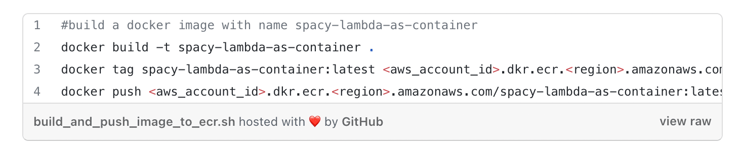
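The same step can also be scripted with boto3, the AWS SDK for Python, instead of the CLI. This is a sketch, not the commands in the screenshot: the repository name, the scan-on-push setting and the helper names are assumptions, and AWS credentials must be configured locally. An existing repository is reused rather than treated as an error.

```python
def ecr_image_uri(account_id, region, repo_name, tag="latest"):
    """Build the ECR image URI used by docker push and by Lambda."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repo_name}:{tag}"


def ensure_ecr_repository(repo_name, region, ecr_client=None):
    """Create the repository if needed and return its URI (without a tag)."""
    if ecr_client is None:
        import boto3  # needs AWS credentials configured locally
        ecr_client = boto3.client("ecr", region_name=region)
    try:
        response = ecr_client.create_repository(
            repositoryName=repo_name,
            imageScanningConfiguration={"scanOnPush": True},  # optional
        )
        return response["repository"]["repositoryUri"]
    except ecr_client.exceptions.RepositoryAlreadyExistsException:
        # Re-running the script should not fail if the repository exists.
        response = ecr_client.describe_repositories(repositoryNames=[repo_name])
        return response["repositories"][0]["repositoryUri"]
```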

- Build and upload your Docker image to ECR repository

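Once the repository is created, we build the Docker image from the Dockerfile, tag it as latest and push it to ECR. Note that Lambda records the image digest when a function is created or updated, so after pushing a new latest image you still need to update the function's code for it to pick up the new image.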
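The build-and-push sequence can be sketched as a small Python script that shells out to the AWS CLI and Docker. It assumes both tools are installed and that the local image name and repository name are the hypothetical `spacy-lambda`; the login step reproduces the usual `aws ecr get-login-password | docker login --password-stdin` pipeline.

```python
import subprocess


def build_and_push_commands(image_name, registry, repo_name, tag="latest"):
    """Return the docker commands that build, tag and push the image."""
    remote = f"{registry}/{repo_name}:{tag}"
    return [
        ["docker", "build", "-t", f"{image_name}:{tag}", "."],
        ["docker", "tag", f"{image_name}:{tag}", remote],
        ["docker", "push", remote],
    ]


def docker_login_to_ecr(registry, region):
    """Log Docker in to ECR using a short-lived password from the AWS CLI."""
    password = subprocess.run(
        ["aws", "ecr", "get-login-password", "--region", region],
        check=True, capture_output=True, text=True,
    ).stdout
    subprocess.run(
        ["docker", "login", "--username", "AWS", "--password-stdin", registry],
        input=password, check=True, text=True,
    )


def build_and_push(image_name, account_id, region, repo_name, tag="latest"):
    registry = f"{account_id}.dkr.ecr.{region}.amazonaws.com"
    docker_login_to_ecr(registry, region)
    for command in build_and_push_commands(image_name, registry, repo_name, tag):
        subprocess.run(command, check=True)  # stop at the first failing step
```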

- Create and test the container Lambda

The last step is to create an AWS Lambda function from the image:

- Go to the AWS Lambda console and choose Create function

- Select Container image

- Enter a function name and choose Browse images to select the repository we created above

- Select the image with the latest tag

Great! Now we have a Lambda function based on our container image. Because we are running a large spaCy model (remember that our Docker image is around 3 GB), increase the memory and timeout assigned to the Lambda under General configuration. Lambda allows up to 10,240 MB of memory and a timeout of up to 15 minutes.

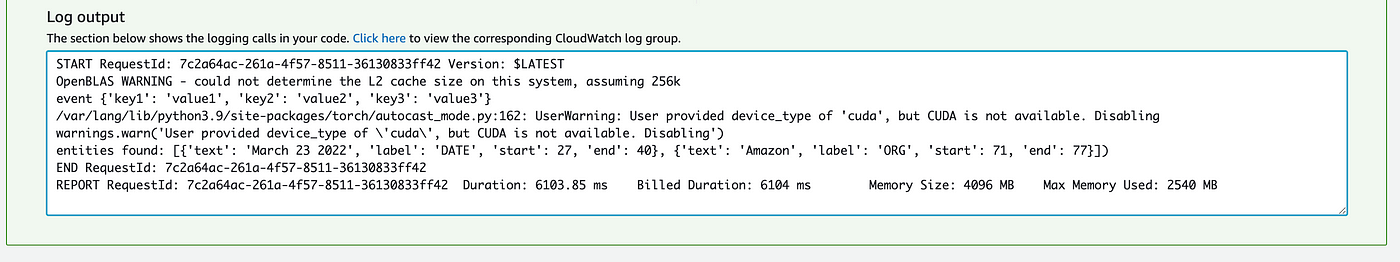
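The console steps and the configuration change can also be expressed with boto3's `create_function`. A sketch under stated assumptions: the function name, image URI and IAM role ARN are placeholders (the console creates an execution role for you, the API does not), and 4096 MB / 300 s are hypothetical starting values to tune, not measured requirements.

```python
def container_function_config(function_name, image_uri, role_arn,
                              memory_mb=4096, timeout_s=300):
    """Keyword arguments for Lambda's CreateFunction call with a container image."""
    if not 128 <= memory_mb <= 10240:
        raise ValueError("Lambda memory must be between 128 and 10240 MB")
    if not 1 <= timeout_s <= 900:
        raise ValueError("Lambda timeout must be between 1 and 900 seconds")
    return {
        "FunctionName": function_name,
        "PackageType": "Image",           # container image instead of .zip
        "Code": {"ImageUri": image_uri},  # e.g. the ECR URI ending in :latest
        "Role": role_arn,
        "MemorySize": memory_mb,
        "Timeout": timeout_s,
    }


def create_container_function(config, region, lambda_client=None):
    """Create the function; requires AWS credentials when no client is passed."""
    if lambda_client is None:
        import boto3
        lambda_client = boto3.client("lambda", region_name=region)
    return lambda_client.create_function(**config)
```

After pushing a new image, the function can be pointed at it with `update_function_code(FunctionName=..., ImageUri=...)`.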

To test the function, create a test event from the simple hello-world template. Its contents do not matter, because this example does not use any information from the event.

Run the test and voilà! You should see the spaCy results in the logs.
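The same test can be run outside the console with boto3's `invoke`. Setting `LogType="Tail"` asks Lambda to return the last 4 KB of the execution log, base64-encoded, so the printed entities can be read directly. A sketch; the function name and the helper are hypothetical, and the event mirrors the hello-world template.

```python
import base64
import json


def invoke_and_show_logs(function_name, event, region, lambda_client=None):
    """Invoke the function synchronously and return (payload, log_tail)."""
    if lambda_client is None:
        import boto3  # needs AWS credentials configured locally
        lambda_client = boto3.client("lambda", region_name=region)
    response = lambda_client.invoke(
        FunctionName=function_name,
        InvocationType="RequestResponse",  # wait for the result
        LogType="Tail",                    # include the end of the execution log
        Payload=json.dumps(event).encode("utf-8"),
    )
    payload = json.loads(response["Payload"].read())
    log_tail = base64.b64decode(response.get("LogResult", "")).decode("utf-8")
    return payload, log_tail
```

For example, `invoke_and_show_logs("spacy-ner", {"key1": "value1", "key2": "value2", "key3": "value3"}, "eu-west-1")` would return the handler's response together with the tail of its log.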

Conclusions

In this blog post, I have shown the steps to run the spaCy Transformer model on AWS Lambda using its new container image support.

A similar approach can be used to deploy other large ML models.

To learn more about the Container Image Support, check out the AWS documentation.